I want to share some food for thought after discussing with @MyriamBoure her work on the Data Food Consortium initiative and how it could relate to the future of OFN.

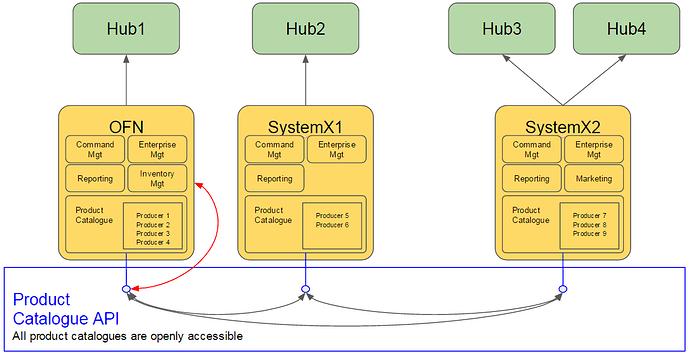

One of the objectives of the Data Food Consortium is for food platforms to make their data available through a common set of API services that share the same representation of the world. For example, each platform would expose its product catalog this way (with many benefits: producers, for instance, would only need to manage their products on one platform).
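To make the idea of a shared representation concrete, here is a minimal Python sketch. It assumes a hypothetical common product shape (`SharedProduct` with `id`, `name`, `unit`, `price`, `producer`); these field names are illustrative only and are not the Data Food Consortium's actual vocabulary. Each platform keeps its own internal record format and only maps it to the shared shape at its API boundary.

```python
import json
from dataclasses import dataclass, asdict


# Hypothetical shared representation; the real DFC standard defines its own terms.
@dataclass(frozen=True)
class SharedProduct:
    id: str
    name: str
    unit: str
    price: float  # price per unit; the currency is left implicit in this sketch
    producer: str


def from_platform_a(record: dict) -> SharedProduct:
    """Map platform A's (hypothetical) internal record to the shared shape."""
    return SharedProduct(
        id=f"platform-a:{record['sku']}",
        name=record["title"],
        unit=record["unit_name"],
        price=record["price_cents"] / 100,
        producer=record["farm"],
    )


def from_platform_b(record: dict) -> SharedProduct:
    """Platform B stores the same information with a different (hypothetical) layout."""
    return SharedProduct(
        id=f"platform-b:{record['product_id']}",
        name=record["label"],
        unit=record["packaging"]["unit"],
        price=record["packaging"]["price"],
        producer=record["supplier"]["name"],
    )


def catalog_as_json(products: list[SharedProduct]) -> str:
    """Serialize a catalog in the shared shape, as an API endpoint might return it."""
    return json.dumps([asdict(p) for p in products], sort_keys=True)
```

Whatever each platform does internally, a consumer of either API sees the same structure, which is what lets producers manage their catalog in one place.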

(Note the red arrow: OFN itself accesses the product catalog exclusively through the API.)

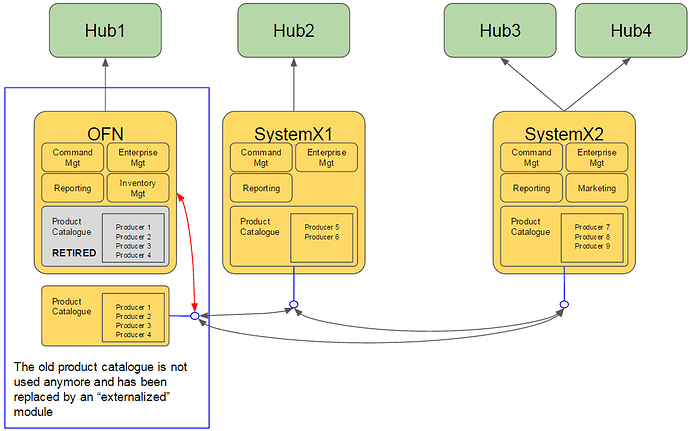

This could be an opportunity to migrate OFN toward a more modular, so-called microservice architecture. How? One useful consequence of abstracting a system's behavior into services is that, once the services are defined and in use, the system's internals can be modified without affecting the outside world, as long as the service interfaces themselves do not change. This can also be done at the same granularity as the services, one functionality at a time. There is no need to rewrite everything from scratch.
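A minimal Python sketch of this point, under assumed names: `ProductCatalog` is a hypothetical service contract, and `MonolithCatalog` / `StandaloneCatalog` are illustrative stand-ins for the current in-app logic and a rewritten service. Because the client only depends on the contract, swapping the implementation changes nothing for it. In a real deployment the contract would be an HTTP API rather than a Python interface.

```python
from typing import Protocol


class ProductCatalog(Protocol):
    """The service contract: clients depend on this, never on an implementation."""

    def list_products(self, producer: str) -> list[str]: ...


class MonolithCatalog:
    """Hypothetical stand-in for catalog logic living inside the current codebase."""

    def __init__(self) -> None:
        # Internally a flat list of (producer, product) rows.
        self._rows = [("Ferme du Val", "Carrots"), ("Ferme du Val", "Leeks"),
                      ("Les Ruches", "Honey")]

    def list_products(self, producer: str) -> list[str]:
        return sorted(name for p, name in self._rows if p == producer)


class StandaloneCatalog:
    """Hypothetical rewritten catalog service with a different internal layout."""

    def __init__(self) -> None:
        # Internally indexed by producer instead of a flat list.
        self._by_producer = {"Ferme du Val": {"Leeks", "Carrots"},
                             "Les Ruches": {"Honey"}}

    def list_products(self, producer: str) -> list[str]:
        return sorted(self._by_producer.get(producer, set()))


def render_shopfront(catalog: ProductCatalog, producer: str) -> str:
    """A client (e.g. a shopfront page) that only talks to the contract."""
    return ", ".join(catalog.list_products(producer))
```

Both `render_shopfront(MonolithCatalog(), "Ferme du Val")` and `render_shopfront(StandaloneCatalog(), "Ferme du Val")` return `"Carrots, Leeks"`: the internals changed, the outside world did not.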

Concrete example: in the diagram above, the “product catalog” functionality could be rewritten as an independent application (a microservice) that serves all client food platforms, including OFN. Step by step, the entire set of OFN’s functionalities could be refactored into modular, independent applications.
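One common way to carry out this step-by-step extraction is a routing facade, often called the strangler fig pattern; this is a suggested technique, not something the consortium prescribes. In the sketch below, the URL prefixes and both handlers are hypothetical placeholders: requests for a functionality go to the monolith until that functionality is marked as extracted, after which they go to the new service, and clients never notice the switch.

```python
from typing import Callable

Handler = Callable[[dict], dict]


def monolith_handler(request: dict) -> dict:
    """Hypothetical: the existing OFN code path."""
    return {"served_by": "monolith", "path": request["path"]}


def catalog_service_handler(request: dict) -> dict:
    """Hypothetical: the extracted product catalog microservice."""
    return {"served_by": "catalog-service", "path": request["path"]}


class Facade:
    """Routes each functionality either to the monolith or to an extracted service."""

    def __init__(self, default: Handler) -> None:
        self._default = default
        self._routes: dict[str, Handler] = {}

    def extract(self, prefix: str, handler: Handler) -> None:
        """Mark one functionality (identified by a URL prefix) as migrated."""
        self._routes[prefix] = handler

    def handle(self, request: dict) -> dict:
        # Longest matching prefix wins, so more specific extractions take priority.
        for prefix in sorted(self._routes, key=len, reverse=True):
            if request["path"].startswith(prefix):
                return self._routes[prefix](request)
        return self._default(request)
```

Usage: start with `Facade(monolith_handler)`, so everything is served by the monolith; after `extract("/api/products", catalog_service_handler)`, product requests go to the new service while `/api/orders/...` still goes to the monolith until it, too, is extracted.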

Another example is some of the functionality to be implemented for LP in France: the new UI module that producers will use to manage their orders could be a separate “microservice” interacting with the rest of OFN via APIs, instead of being developed as part of the OFN core stack.

Some prerequisites:

- a breakdown of the API interfaces, focusing on the main data entities

- a microservice breakdown, in which each service owns its own data

- the technologies and tools to be used: a microservice architecture is more complex to manage, and without proper tooling, chaos follows.
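The data-ownership prerequisite can be sketched in a few lines of Python. All class and method names here are hypothetical, and the services run in-process for brevity (in practice each would be a separate application behind an API). The order service, like the producer order module mentioned above, stores only product IDs and asks the catalog service for product details through its public methods; it never reads the catalog's store directly.

```python
from dataclasses import dataclass, field


class CatalogService:
    """Owns product data. Other services may only call its public methods."""

    def __init__(self) -> None:
        self._products: dict[str, dict] = {}  # private store owned by this service

    def add_product(self, product_id: str, name: str, price: float) -> None:
        self._products[product_id] = {"name": name, "price": price}

    def get_product(self, product_id: str) -> dict:
        """Public API: returns a copy so callers cannot mutate the owner's data."""
        return dict(self._products[product_id])


@dataclass
class Order:
    order_id: str
    lines: list[tuple[str, int]] = field(default_factory=list)  # (product_id, quantity)


class OrderService:
    """Owns order data; keeps only product IDs, never a copy of the catalog."""

    def __init__(self, catalog: CatalogService) -> None:
        self._catalog = catalog
        self._orders: dict[str, Order] = {}

    def create_order(self, order_id: str, lines: list[tuple[str, int]]) -> Order:
        order = Order(order_id, list(lines))
        self._orders[order_id] = order
        return order

    def total(self, order_id: str) -> float:
        # Prices are looked up through the catalog's API at read time.
        order = self._orders[order_id]
        return sum(self._catalog.get_product(pid)["price"] * qty
                   for pid, qty in order.lines)
```

Because the order service holds no copy of product data, a price change made in the catalog is reflected the next time an order total is computed, and the catalog remains free to change how it stores products internally.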

It could definitely be helpful to isolate some services, but it won’t directly help the consortium create a product description standard.
